Table of Contents

- Using Semantic Search to Understand Customer Search Queries and Need States

- The Challenge

- The Approach: Leveraging Semantic Search

- Impact on Customer Personalization

- Conclusion

- Resources

Using Semantic Search to Understand Customer Search Queries and Need States

In today’s data-driven landscape, understanding customer needs is crucial to delivering personalized experiences. In marketing, defined personas, otherwise known as need states, are used to identify the core reasons why consumers want to buy your products. Once you understand your customers’ needs, you can map them to need states, which gives a more complete understanding of your customer base.

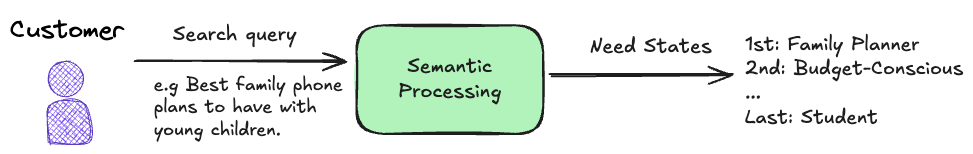

One way to approach this challenge is by performing semantic search on customer search queries to map them against customer need states. This method helps us better capture the intent behind each query, driving more relevant outcomes for both businesses and consumers.

The Challenge

Traditionally, matching search queries to customer needs was done using keyword-based systems. However, this approach often fails to account for the nuances of human language, such as synonyms, contextual meanings, or more complex queries. Customers might express the same need in a variety of ways, making it hard for standard systems to deliver accurate results. This gap motivated us to explore a more advanced solution.
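To make the keyword problem concrete, here is a minimal sketch that scores two queries against a need state by word overlap alone. The need state, the queries, and the helper names (tokenize, keyword_overlap) are hypothetical and chosen only for illustration; this is not the keyword system referred to above.

```python
import re


def tokenize(text):
    """Lowercase a string and split it into a set of alphabetic word tokens."""
    return set(re.findall(r"[a-z]+", text.lower()))


def keyword_overlap(query, need_state):
    """Jaccard overlap between the word sets of two texts (0.0 to 1.0)."""
    q, n = tokenize(query), tokenize(need_state)
    if not q or not n:
        return 0.0
    return len(q & n) / len(q | n)


# Hypothetical need state and two hypothetical queries expressing the same need
need_state = "quick and easy weeknight dinner"
exact_query = "easy weeknight dinner"
paraphrase_query = "fast simple meals for busy evenings"

exact_score = keyword_overlap(exact_query, need_state)
paraphrase_score = keyword_overlap(paraphrase_query, need_state)
print(exact_score, paraphrase_score)
```

The paraphrased query shares no words with the need state, so a purely lexical score cannot link the two even though a human reader would treat them as the same need.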

The Approach: Leveraging Semantic Search

Semantic search takes customer queries to the next level by analyzing the meaning behind the words, rather than relying on an exact match. We implemented a system that aligns search terms with underlying customer need states, which are personas capturing a set of customer motivations, behaviors, and expectations.

To achieve this, we used advanced natural language processing (NLP) models that identify and interpret the intent behind different queries. By doing so, our system can match customers with the most relevant need state, whether they use industry jargon or casual language. This approach not only bridges the gap between diverse search queries but also ensures more accurate, personalized results.

Libraries

We decided to use faiss, developed by Meta’s Fundamental AI Research group, check here. It provides powerful similarity search algorithms that we can use out of the box. The sentence_transformers library is also useful, since we are dealing with search queries, which are typically short sentences. Converting these into embedding vectors is a critical part of the search, and sentence_transformers can do this, see here. numpy and pandas are used for standard data preprocessing and computation.

import faiss
from sentence_transformers import SentenceTransformer
import numpy as np
import pandas as pd

Code Implementation

The idea here is to define functionality that flexibly lets us embed data and add it to a corpus, then perform similarity search between the defined corpus and an input query. A class makes sense here: it groups the logic naturally and keeps things modular, maintainable and extensible down the line.

class SemanticSearch:
    """
    A class for performing semantic search using sentence embeddings and FAISS.

    Parameters
    ----------
    model_name : str, optional
        The name of the SentenceTransformer model to use.
        Default is 'all-MiniLM-L6-v2'.

    Attributes
    ----------
    model : SentenceTransformer
        The SentenceTransformer model used for encoding texts.
    indices : dict
        A dictionary of FAISS indices for each corpus.
    embeddings : dict
        A dictionary of embeddings for each corpus.
    data : dict
        A dictionary of original data for each corpus.
    """

    def __init__(self, model_name='all-MiniLM-L6-v2'):
        self.model = SentenceTransformer(model_name)
        self.indices = {}
        self.embeddings = {}
        self.data = {}

    def add_corpus(self, corpus_name, corpus_data):
        """
        Adds a corpus to the semantic search system.

        Parameters
        ----------
        corpus_name : str
            The name of the corpus.
        corpus_data : list of str
            The data (texts) of the corpus.

        Returns
        -------
        None
        """
        embeddings = self.model.encode(corpus_data, convert_to_numpy=True)

        # Normalize embeddings in place so inner product equals cosine similarity
        faiss.normalize_L2(embeddings)

        index = faiss.IndexFlatIP(embeddings.shape[1])
        index.add(embeddings)

        self.indices[corpus_name] = index
        self.embeddings[corpus_name] = embeddings
        self.data[corpus_name] = corpus_data

    def search(self, query_texts, target_corpus, k=1, threshold=None):
        """
        Searches for the most similar texts in the target corpus to the given query texts.

        Parameters
        ----------
        query_texts : list of str
            The list of query texts.
        target_corpus : str
            The name of the corpus to search in.
        k : int, optional
            The number of top results to return. Default is 1.
        threshold : float, optional
            The minimum similarity score to consider. If None, all results are returned.

        Returns
        -------
        list of list of tuple
            A list where each element corresponds to a query text and contains
            a list of tuples (matched_text, similarity_score).
        """
        # Encode and normalize query texts
        query_embeddings = self.model.encode(query_texts, convert_to_numpy=True)
        faiss.normalize_L2(query_embeddings)

        index = self.indices[target_corpus]

        # Perform the search
        distances, indices = index.search(query_embeddings, k)

        results = []
        for i in range(len(query_texts)):
            res = []
            for j in range(k):
                sim = distances[i][j]  # Cosine similarity
                idx = indices[i][j]
                # FAISS pads with index -1 when the corpus has fewer than k items
                if idx == -1:
                    continue
                if threshold is None or sim >= threshold:
                    res.append((self.data[target_corpus][idx], sim))
            results.append(res)

        return results

# Initialize the semantic search
semantic_search = SemanticSearch()

# Assume 'loaded_list' contains your search queries (list of strings)
# Assume 'needslist' contains your need states (list of strings)

# Add corpora to the semantic search
semantic_search.add_corpus('search_queries', loaded_list)
semantic_search.add_corpus('need_states', needslist)

- Model Selection: Uses SentenceTransformer to encode texts into embeddings, leveraging pre-trained models like ‘all-MiniLM-L6-v2’ for fast, efficient and accurate semantic understanding, see here. This can easily be swapped for alternatives; the following might be better in this case, check this.

- Normalization: Embeddings are normalized with faiss.normalize_L2 so that the inner product computed by the index equals cosine similarity. Cosine similarity is used because its scores are bounded, which gives the chosen threshold more meaning; this explains how it works.

- Indexing: Uses FAISS (faiss.IndexFlatIP) for exact brute-force similarity search, retrieving the top-k most similar items by cosine similarity. Other index types are available but potentially less accurate; check here for a guide on how to choose an index, along with this for more info.

- Corpus Management: Maintains separate indices, embeddings, and original data for each corpus, enabling flexible and scalable handling of multiple datasets.

- Threshold Filtering: Allows optional thresholding on similarity scores to filter out less relevant results, enhancing the quality of search results.

- Versatile Search: Supports searching both ways (need states to search queries and vice versa), demonstrating the flexibility of the search functionality.
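The normalization, indexing and threshold points above can be illustrated without any dependencies. The sketch below is a plain-Python stand-in for what an exact inner-product search over normalized vectors computes; it is not how FAISS is implemented internally, and the three-dimensional vectors are hypothetical toy data rather than real embeddings.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def inner_product(a, b):
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    """Textbook cosine similarity, computed without normalizing first."""
    return inner_product(a, b) / (
        math.sqrt(inner_product(a, a)) * math.sqrt(inner_product(b, b))
    )


def brute_force_search(query, corpus, k=1, threshold=None):
    """Score the query against every corpus vector and keep the top k."""
    q = l2_normalize(query)
    scored = [(inner_product(q, l2_normalize(v)), i) for i, v in enumerate(corpus)]
    scored.sort(reverse=True)  # highest similarity first
    return [(i, s) for s, i in scored[:k] if threshold is None or s >= threshold]


# Hypothetical toy vectors; real sentence embeddings have hundreds of dimensions
corpus = [[1.0, 0.0, 0.0], [0.6, 0.8, 0.0], [0.0, 0.0, 2.0]]
query = [3.0, 4.0, 0.0]

top2 = brute_force_search(query, corpus, k=2)
filtered = brute_force_search(query, corpus, k=2, threshold=0.8)
print(top2)
print(filtered)
```

Because both sides are normalized, the inner product matches the textbook cosine value regardless of vector length, which is why a single threshold can be applied across all queries.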

Example 1: Given a need state, find top k closest search queries

For exploratory analysis, it can be worthwhile to look at the top customer search queries for each need state. This gives a better understanding of which search queries you can expect to link to a need state, so you can revise your need states if they are not capturing the search queries you’d like.

# Parameters
threshold = 0.8  # Adjust the threshold as needed
k = 3  # Number of top results to retrieve

results_need_to_queries = semantic_search.search(
    query_texts=needslist,
    target_corpus='search_queries',
    k=k,  # Number of closest search queries to retrieve
    threshold=threshold
)

# Create a DataFrame with need states and their top k search queries
need_states = needslist
top_search_queries_list = []
similarity_scores_list = []

for res in results_need_to_queries:
    top_queries = [item[0] for item in res]  # Extract the search queries
    similarity_scores = [item[1] for item in res]  # Extract similarity scores
    top_search_queries_list.append(top_queries)
    similarity_scores_list.append(similarity_scores)

# Create the DataFrame
df_need_to_queries = pd.DataFrame({
    'need_state': need_states,
    'top_search_queries': top_search_queries_list,
    'similarity_scores': similarity_scores_list
})

# Display the first few rows of the DataFrame
print(df_need_to_queries.head())
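For inspection, it can be easier to work with one row per matched pair rather than lists nested inside cells. The sketch below flattens results shaped like the output of search() into (need state, query, score) rows. The helper name flatten_results and the example data are hypothetical, not part of the original implementation.

```python
def flatten_results(query_texts, results):
    """Turn nested search results into one (query, match, score) row per match."""
    rows = []
    for query_text, matches in zip(query_texts, results):
        for matched_text, score in matches:
            rows.append((query_text, matched_text, float(score)))
    return rows


def unmatched_queries(query_texts, results):
    """List the query texts for which no match cleared the threshold."""
    return [q for q, matches in zip(query_texts, results) if not matches]


# Hypothetical need states and results, shaped like the output of search()
example_needs = ["healthy snacking", "family dinner"]
example_results = [
    [("low sugar snack bars", 0.86), ("protein snacks", 0.81)],
    [],  # no search query cleared the threshold for this need state
]

rows = flatten_results(example_needs, example_results)
no_match = unmatched_queries(example_needs, example_results)
for row in rows:
    print(row)
print(no_match)
```

The rows can be passed to pd.DataFrame with three column names if a tabular view is preferred. A need state that never appears in the rows is a candidate for rewording or for a lower threshold.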

Example 2: Given a search query, find the closest need state

This is the ultimate aim as it allows you to tie a customer search query to the closest matching need state.

# Parameters
threshold = 0.8  # Adjust the threshold as needed
k = 1  # Number of top results to retrieve

results_query_to_need = semantic_search.search(
    query_texts=loaded_list,
    target_corpus='need_states',
    k=k,  # Only the closest need state
    threshold=threshold
)

# Create a DataFrame with search queries and their closest need state
search_queries = loaded_list
closest_need_states = []
similarity_scores = []

for res in results_query_to_need:
    if res:
        closest_need_states.append(res[0][0])  # The need state text
        similarity_scores.append(res[0][1])  # The similarity score
    else:
        closest_need_states.append(None)
        similarity_scores.append(None)

# Create the DataFrame
df_query_to_need = pd.DataFrame({
    'search_query': search_queries,
    'closest_need_state': closest_need_states,
    'similarity_score': similarity_scores
})

# Display the first few rows of the DataFrame
print(df_query_to_need.head())
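With a threshold in place, some queries end up with no need state at all (None above). One way to sanity-check the chosen threshold is to count how many queries land on each need state and what share is left unmatched. This is a minimal sketch: the helper name summarize_assignments and the example assignments are hypothetical.

```python
from collections import Counter


def summarize_assignments(closest_need_states):
    """Count queries per need state and report the share left unmatched."""
    total = len(closest_need_states)
    counts = Counter(s for s in closest_need_states if s is not None)
    unmatched = total - sum(counts.values())
    unmatched_share = unmatched / total if total else 0.0
    return counts, unmatched_share


# Hypothetical assignments, shaped like the 'closest_need_state' column above
example_assignments = ["healthy snacking", None, "family dinner", "healthy snacking"]

counts, unmatched_share = summarize_assignments(example_assignments)
print(counts.most_common())
print(unmatched_share)
```

A large unmatched share may suggest the threshold is too strict or that the need states do not cover what customers search for; a single need state absorbing most queries may suggest it is worded too broadly.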

Impact on Customer Personalization

By integrating semantic search, we have been able to significantly improve how customer queries are mapped to need states. This allows businesses to provide more tailored search results, product recommendations, and solutions that align with what customers are actually looking for. This enhanced understanding of customer intent leads to a more personalized experience, increasing satisfaction and conversion rates.

Conclusion

Through the use of semantic search, we’ve been able to better connect customer search queries with the underlying customer need states, enabling more relevant and personalized interactions. As this technology evolves, we look forward to refining our models further to capture even more subtle nuances in customer behavior and intent.

Resources

- Semantic Search Sentence Transformer Post: a highly useful, easy-to-digest, pragmatic intro to the area of semantic search.