Note: This post accompanies a discussion on chatbots given as part of The Education Exchange, a series for teachers that aims to spread AI awareness in the hope of encouraging further adoption. I hope this post highlights how the chatbot space, after decades of gradual progress, has recently exploded, and the ways in which this could impact educational institutions.

Table of Contents

- Introduction

- The History of Chatbots

- The Present: Chatbots in Society Today

- The Future of Chatbots: Where Are We Headed?

- Risks & Challenges

- Ensuring Truth & Safety

- Connecting the Dots: Chatbots & Education

- Conclusion

- Further Resources

Introduction

Have you ever asked a virtual assistant on your phone about the weather, or maybe chatted with a pop-up support bot on a website? Those little windows and voice assistants are all examples of chatbots. We often take them for granted nowadays, but they represent a fascinating area of technology that has rapidly advanced in recent years.

At its simplest, a chatbot is just a computer program built to chat with users in some way, whether that’s through text messages, voice commands, or other forms of interaction. It might sound straightforward, but behind the scenes, there’s a lot of clever programming and design that makes these conversations feel natural and seamless.

In education, it’s worth asking: How can these “conversational machines” help teachers and students? In this post, we’ll explore the story of chatbots, from their quirky early days to their present-day sophistication, and see why schools should pay attention. Think of it like following the career of a once-niche musician who suddenly got famous, and now everyone’s wondering if they should book them for the school talent show.

The History of Chatbots

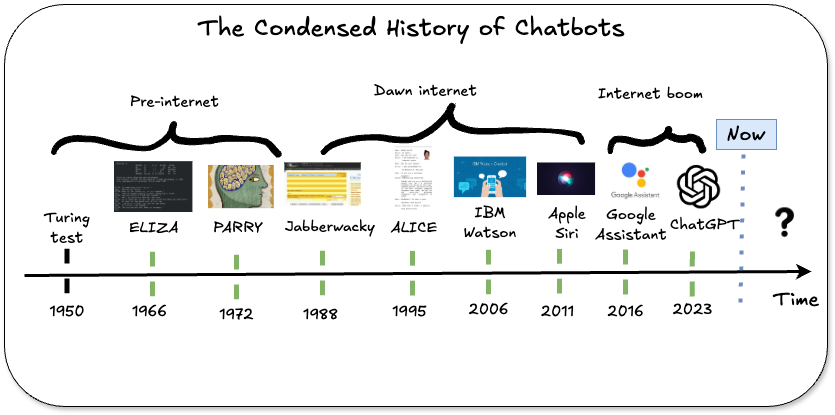

Chatbots weren’t always powered by cutting-edge machine learning. In the mid-1960s, an early program called ELIZA used simple keyword matching and rules to mimic a psychotherapist, often by just rephrasing user statements as questions. For example, if you typed “I feel sad”, ELIZA might respond, “Why do you feel sad?” By today’s standards it was very basic, but it revealed how humans might respond to a computer that seems to understand them.
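To make the keyword-matching idea concrete, here is a minimal Python sketch in the spirit of ELIZA. The rules, the `REFLECTIONS` table, and the `respond` function are illustrative assumptions, not the original ELIZA script, which was far larger.

```python
import re

# A few hand-written rules in the spirit of ELIZA: each pairs a keyword
# pattern with a response template. Illustrative only, not the original script.
RULES = [
    (re.compile(r"\bi feel (.+)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.+)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (\w+)", re.IGNORECASE), "Tell me more about your {0}."),
]

# Swap first- and second-person words so echoed phrases read naturally.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word.lower(), word) for word in fragment.split())


def respond(message: str) -> str:
    text = message.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            # Echo the captured words back inside the template.
            return template.format(*(reflect(group) for group in match.groups()))
    # Fallback when no keyword matches.
    return "Please, go on."


print(respond("I feel sad"))
```

Notice that nothing here understands the word “sad”: the program only spots the phrase “I feel” and echoes the rest back, which is why ELIZA’s apparent understanding was so striking.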

In 1972, PARRY emerged as a more sophisticated chatbot, often dubbed “ELIZA with attitude”. Created by psychiatrist Kenneth Colby at Stanford University, PARRY simulated the behavior of a person with paranoid schizophrenia. Unlike its predecessor ELIZA, PARRY maintained a consistent personality and backstory, employing nearly five hundred heuristics to generate responses colored by suspicion and mistrust. The program proved remarkably convincing—when tested using a variation of the Turing Test, experienced psychiatrists could only correctly identify PARRY as a computer program 48% of the time, essentially no better than random chance.

Fast forward a few decades, and we started seeing more advanced bots in customer service and online forums. The big leap came with the rise of machine learning and deep learning algorithms that learn patterns from large datasets. Suddenly, chatbots were no longer just rehashing scripted lines; they could generate new, context-aware responses. It’s kind of like going from a cassette tape that plays the same tune over and over to a streaming service that can recommend new tracks based on your mood. Alongside these advances, highly personalized chatbots such as ChatGPT and Claude are now available directly to consumers, not just to enterprises. These chatbots offer tailored interactions, making them accessible and useful for individual users in many contexts.

To summarize the general historic trends in chatbots:

- 1980 to 2000: Focused on single modalities and the use of statistical techniques to process these modalities.

- 2000 to 2010: Emphasized the conversion of modalities with a focus on computer-human interactions, fueled by the rise of the internet.

- 2010 to 2020: Concentrated on the fusion of modalities, driven by the internet boom and advances in deep learning and neural networks.

- 2020 to present: Aimed at refining and scaling systems using advancements in computational infrastructure.

The Present: Chatbots in Society Today

Chatbots now pop up everywhere—customer support chats, voice-activated assistants like Siri or Alexa, and even online healthcare portals. Here are a few prominent places they show up:

- Customer Service and Sales: Tired of waiting on hold for your cable company? Many businesses rely on chatbots to answer FAQs or guide customers through troubleshooting steps.

- Personal Productivity: Some AI assistants can schedule meetings, set reminders, or coordinate your travel. They’re handy for managing day-to-day tasks.

- Healthcare and Triage: Need a quick check on your symptoms? Certain medical apps use chatbots to ask questions and suggest possible actions, though they can’t replace an actual doctor’s visit.

- Education: This is where it gets exciting for teachers. We already see AI tutors helping students practice math problems or learn new languages. Tools like Duolingo, for instance, use chatbot-like interactions to teach vocabulary and grammar in bite-sized lessons.

The star of the show these days is Generative AI, an area of AI that focuses on developing models capable of generating new content based on patterns learned from existing data. These models underpin most modern chatbot systems, replacing older technologies.

Large language models (LLMs) such as GPT, which can produce text, answer questions, and even write (sometimes questionable) poems, represent the first wave of these models. They are a giant leap forward in chatbot technology because they generate responses on the fly rather than relying on a rigid, script-like approach.
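As a rough intuition for “generating on the fly”, the toy Python sketch below learns which word tends to follow which in a tiny made-up corpus, then builds new sentences one word at a time. This is a bigram (Markov chain) model chosen purely as an illustration: real LLMs use large neural networks over tokens and far more context, but they also produce text by repeatedly predicting a plausible next piece.

```python
import random
from collections import defaultdict


def train_bigrams(corpus: str) -> dict[str, list[str]]:
    """Record, for every word, the words that followed it in the corpus."""
    words = corpus.lower().split()
    followers: dict[str, list[str]] = defaultdict(list)
    for current, nxt in zip(words, words[1:]):
        followers[current].append(nxt)
    return dict(followers)


def generate(followers: dict[str, list[str]], start: str, max_words: int = 10, seed: int = 0) -> str:
    """Build a sentence one word at a time by sampling a word seen after the previous one."""
    rng = random.Random(seed)
    output = [start]
    for _ in range(max_words - 1):
        options = followers.get(output[-1])
        if not options:  # no known continuation, so stop early
            break
        output.append(rng.choice(options))
    return " ".join(output)


corpus = "the cat sat on the mat . the dog sat on the rug ."
model = train_bigrams(corpus)
print(generate(model, "the", seed=1))
```

Even this tiny model can produce a sentence it never saw, such as “the cat sat on the rug”, because it recombines learned patterns instead of replaying a script.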

In the real world, however, humans rarely rely on a single mode of communication. We perceive our environment through sights, sounds, and other senses, and we synthesize this information to understand and react to our surroundings. Multimodal large language models (MMLLMs) aim to emulate this multifaceted approach. They build on LLMs by integrating diverse data types (or modalities) such as text, images, audio, and other sensory inputs, which improves their understanding and the accuracy of their responses in real-world applications such as chatbots.

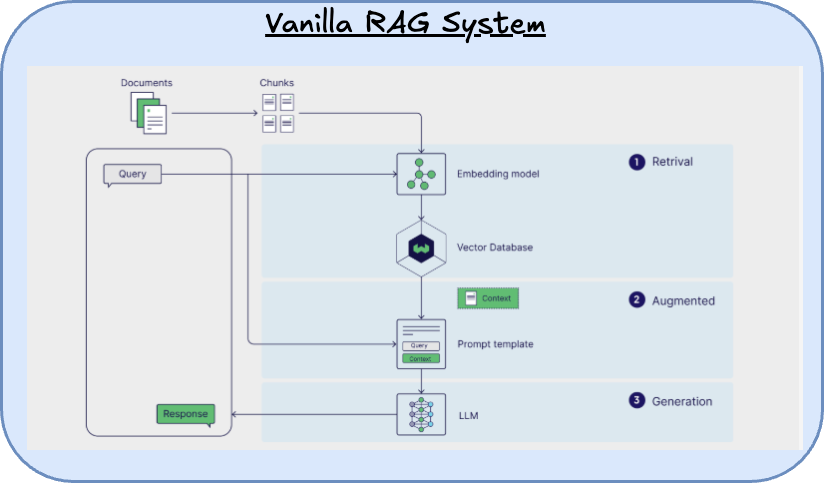

Sometimes you want a personalized chatbot that has access to information not available elsewhere. This is where Retrieval-Augmented Generation (RAG) comes into play. RAG systems combine the power of large language models with a retrieval mechanism that fetches relevant information from specific datasets or databases. This allows chatbots to provide more accurate and contextually relevant responses by drawing on up-to-date or specialized information, making them highly effective for personalized educational tools and other niche applications.
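The retrieve-then-generate idea can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions: the school documents are hypothetical, relevance is scored by simple word overlap (production RAG systems typically use vector embeddings and a search index instead), and the final call to a language model is left out. The code only builds the augmented prompt that would be sent to one.

```python
import re

# Hypothetical school documents standing in for a private knowledge base.
DOCUMENTS = [
    "The Year 9 science exam takes place on 14 March in the main hall.",
    "Library opening hours are 8am to 5pm on weekdays.",
    "Homework for the algebra unit is due every Friday.",
]


def tokenize(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by how many words they share with the question."""
    q_words = tokenize(question)
    ranked = sorted(documents, key=lambda doc: len(q_words & tokenize(doc)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Augment the question with retrieved context before it goes to a language model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


print(build_prompt("When is the science exam?", DOCUMENTS))
```

The language model never needs to have seen the exam date during training; it only has to read the retrieved context placed in front of the question.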

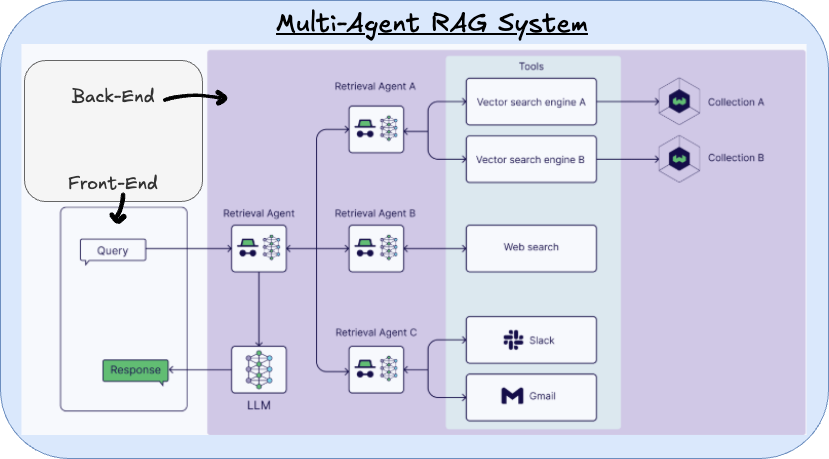

The latest trend is the incorporation of AI agents into chatbots. These systems go beyond simple conversation, enabling chatbots to perform complex tasks somewhat autonomously. AI agents can handle multi-step processes, such as booking travel, managing schedules, or even conducting preliminary research. By integrating these capabilities, chatbots are evolving into versatile digital assistants that can streamline many activities, making them even more valuable in educational settings and beyond. For an example, check out OpenAI’s recent Operator agent, which they have released as a feature in ChatGPT.
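At its core, an agent runs a loop: decide on the next action, call a tool, observe the result, and repeat until the goal is met. The Python sketch below illustrates that loop under heavy assumptions: the tools `search_flights` and `add_to_calendar` are hypothetical stubs that return canned text, and `choose_next_action` is a hard-coded stand-in for the language model that would normally decide each step.

```python
from typing import Callable, Optional


def search_flights(destination: str) -> str:
    # Hypothetical stub: pretend we queried a flight service.
    return f"Flight to {destination} departs 09:15"


def add_to_calendar(event: str) -> str:
    # Hypothetical stub: pretend we wrote to the user's calendar.
    return f"Added to calendar: {event}"


TOOLS: dict[str, Callable[[str], str]] = {
    "search_flights": search_flights,
    "add_to_calendar": add_to_calendar,
}


def choose_next_action(goal: str, observations: list[str]) -> Optional[tuple[str, str]]:
    """Stand-in for the language model that decides what to do next.

    A real agent would prompt an LLM with the goal and the observations so far;
    this hard-coded policy looks up a flight and then adds it to the calendar.
    """
    if not observations:
        return ("search_flights", goal)
    if len(observations) == 1:
        return ("add_to_calendar", observations[-1])
    return None  # goal reached


def run_agent(goal: str, max_steps: int = 5) -> list[str]:
    observations: list[str] = []
    for _ in range(max_steps):  # cap the loop so a confused agent cannot run forever
        action = choose_next_action(goal, observations)
        if action is None:
            break
        tool_name, argument = action
        observations.append(TOOLS[tool_name](argument))  # act, then record what happened
    return observations


for step in run_agent("Paris"):
    print(step)
```

The `max_steps` cap is one simple safeguard: because an agent acts somewhat autonomously, it helps to bound how many actions it can take before a human checks in.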

The Future of Chatbots: Where Are We Headed?

So, what’s next? If the present is an era of chatbots that sound convincingly human, the future promises even more:

- Growing Personalization: Imagine a chatbot that understands each student’s learning style, like a personal tutor that knows if you prefer practicing algebra with word problems or straightforward formulas. AI systems are getting better at adapting to individual needs in real time.

- Autonomous AI Agents: We might see chatbots that can perform tasks beyond just conversation, such as doing background research, organizing data, or coordinating with other services. Think of them as an incredibly organized, 24/7 teaching assistant.

- Ethical & Human-Like Interactions: As bots become more lifelike, we’ll have to wrestle with questions of authenticity. Are students interacting with an advanced AI that “feels” empathetic? Or are they still aware it’s a computer program? Balancing the helpfulness of an empathetic-sounding chatbot with transparent communication is going to be crucial.

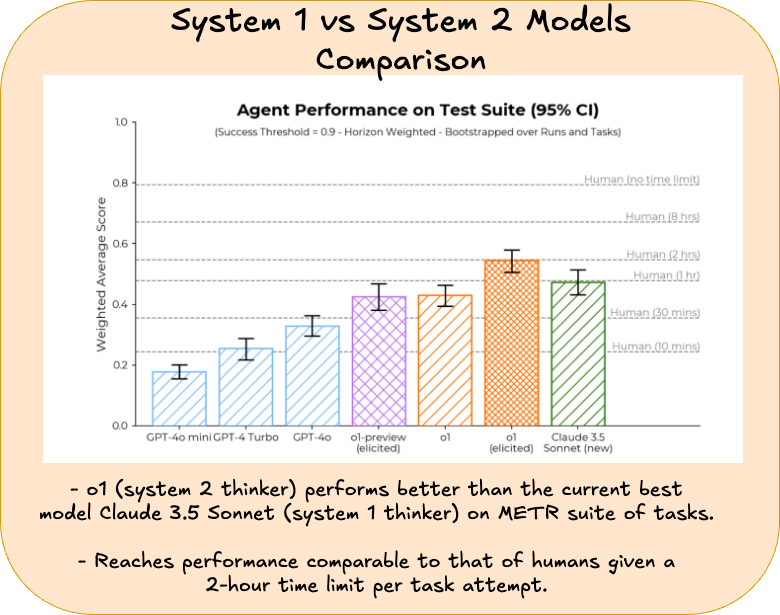

The next frontier in AI development is enhanced reasoning. Current models, often described as “System 1 thinkers”, excel at fast, intuitive responses but can struggle with complex, logical reasoning. The aim is to integrate “System 2 thinking”, which involves more deliberate, analytical processing. OpenAI’s recent o1 series of models is an example of this shift, designed to improve reasoning and problem-solving skills. This evolution should enable chatbots to handle more sophisticated tasks, such as nuanced decision-making and intricate problem-solving, making them more effective and reliable in educational settings and beyond.

Risks & Challenges

Of course, with great power comes great responsibility, and chatbots aren’t all sunshine and roses:

- Misinformation & Hallucinations: Chatbots sometimes get the facts wrong or even invent them (“hallucinations”). A student might ask for a historical date and receive a totally off-base answer if the bot’s training data is incomplete or biased.

- Data Privacy & Bias: Chatbots learn from data, which can include biased examples. Plus, if they store or handle student information, there’s a need for strict privacy safeguards; nobody wants sensitive personal details floating around the internet.

- AI Dependency: Could overreliance on chatbots stifle critical thinking? If students (or teachers) rely on an AI to answer every question, they might lose the habit of doing their own research or analysis.

- Safeguarding Learners: In an educational context, you’d also have to be sure chatbots are age-appropriate. For younger kids, any content filtering or oversight needs to be robust to prevent exposure to harmful or misleading material.

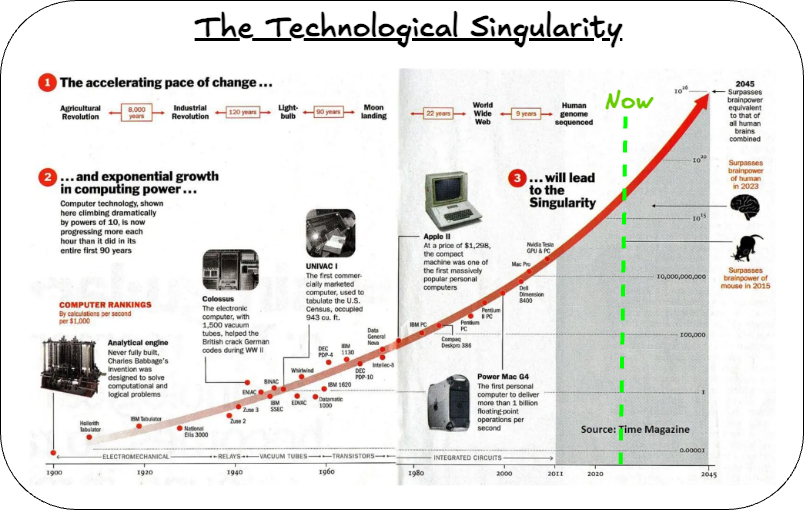

Another significant concern is the potential arrival of the “Technological Singularity”: the hypothetical point at which technological systems become more intelligent than humans. The term “singularity” was first used in this context by John von Neumann and later popularised by the likes of Vernor Vinge & Ray Kurzweil (it’s super interesting to watch this video of Kurzweil’s predictions, recorded in 2009).

If this point is reached, the risks mentioned earlier could pale in comparison to the new challenges that might arise. The AI Singularity could lead to unprecedented changes in society, with AI systems potentially making decisions beyond human comprehension or control. This could impact everything from job markets to ethical standards, and even the fundamental way we interact with technology. The implications are vast and largely unknown, making it crucial to approach AI development with caution and foresight.

Many who argue against the likelihood of reaching “The Singularity” often cite that humans possess something AI does not: consciousness, sentience, or subjective experience. When asked to define sentience, these skeptics typically respond with, “I don’t know, but they don’t have it,” which can seem like an inconsistent position. To tackle this, it might be more effective to focus on subjective experience, as it is a more tangible aspect of consciousness. If we can demonstrate that AI systems have subjective experiences, it could shift the conversation and make people reconsider their stance on sentience. This approach could help bridge the gap in understanding and address the deeper concerns about AI’s potential impact on society.

Note: Geoffrey Hinton, Nobel Prize winner and widely regarded as the “Godfather of AI”, has described a nice thought experiment (see here) showing how AI chatbots can be shown to have subjective experiences. Following from my earlier argument, this should shift the debate toward these systems being potentially conscious, which makes reaching “the singularity” less implausible.

Ensuring Truth & Safety

Despite the risks, there are a few high-level steps we can take to keep chatbots trustworthy and safe:

- Building Trustworthy AI: Developers can implement content moderation filters, real-time fact-checking, or disclaimers that remind users to verify information. Some newer chatbot systems might even cite their sources.

- Regulation & Governance: Governments, institutions, and educational boards can set guidelines for how AI is used in schools. Maybe that means requiring parental consent or limiting the data a chatbot can collect from students.

- Teaching Digital Literacy: This is perhaps the most important. Just like we teach kids to spot fake news, we can teach them to critically evaluate chatbot responses. The key is blending AI tools into lesson plans that emphasize thoughtful inquiry, not blind acceptance.
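As a minimal sketch of the content-filter and disclaimer ideas, the Python function below wraps a chatbot reply in two safeguards: a keyword filter that blocks replies containing listed terms, and a reminder to verify information. The blocklist, the wording, and the `moderate` function are hypothetical; real systems generally rely on trained classifiers and agreed policies rather than a short word list.

```python
import re

# Hypothetical blocklist; a real deployment would use a trained classifier
# and policies agreed with the school, not a short word list.
BLOCKED_TERMS = {"gamble", "violence"}
DISCLAIMER = "Remember: chatbots can make mistakes, so check important facts with a trusted source."


def moderate(reply: str) -> str:
    """Block replies containing listed terms; otherwise append a disclaimer."""
    words = set(re.findall(r"[a-z']+", reply.lower()))
    if words & BLOCKED_TERMS:
        return "Sorry, I can't help with that. Please ask your teacher."
    return f"{reply}\n\n{DISCLAIMER}"


print(moderate("Paris is the capital of France."))
```

Exact word matching is easy to understand but brittle: this version would not catch “gambling”, which is one reason keyword lists are usually only a first line of defense.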

It’s worth noting that AI safety is a top concern for many members of the AI research community. Many labs and large companies that build models have AI Safety & Alignment teams that provide guidance and best practices in this area. There are also entire organizations dedicated to AI safety, such as the AI Safety Institute, the Alignment Research Center, and the Center for Humane Technology. These organizations develop frameworks and guidelines to help ensure that AI technologies are developed and deployed responsibly, minimizing risks and maximizing benefits for society.

Fun Fact: Dario Amodei, co-founder and CEO of Anthropic, has said publicly that Anthropic was founded on the basis of “building AI systems safely in a way that’d benefit humanity”. Long before it was common knowledge, he and other researchers speculated that safety would be important, and they tried to get people’s attention by compiling a list of “Concrete Problems in AI Safety”. According to co-author Chris Olah, that list hasn’t held up especially well in hindsight in terms of addressing the right problems, but it was still a worthwhile consensus-building exercise at the time, and years later it has helped give rise to the field of AI safety research. You can see the discussion here.

Connecting the Dots: Chatbots & Education

So why should teachers care about all this? In a nutshell, chatbots could save time, personalize learning, and spark excitement—if used responsibly. Here are some ways they might help:

- Quick Feedback: An AI chatbot can point out errors in a student’s math solution or essay. This frees teachers to focus on more in-depth discussions or one-on-one support.

- Interactive Learning: Chatbots can provide “drill” exercises or real-time quizzes, turning routine practice into an engaging conversation.

- Lesson Planning & Resources: Some teachers use AI-based tools to find new resources, worksheets, or lesson ideas. A well-informed chatbot could become an on-demand repository of teaching materials.

The goal is to see chatbots as complements rather than replacements. They can do the repetitive grunt work or give a second set of eyes on a lesson, allowing educators to devote more energy to creativity, empathy, and deeper engagement.

Conclusion

Chatbots have evolved from a simple curiosity into an integral part of our digital lives. In the context of education, they hold enormous promise, as long as we remain cautious about risks like misinformation, data privacy, and overreliance. By teaching students to be critical consumers of AI outputs, and by setting responsible guidelines at the school or district level, we can harness chatbot technology to enrich learning experiences.

The big takeaway? Don’t fear the bot—just treat it with the same discernment you’d treat any new classroom tool. Ask questions, do some testing, and decide where it best fits your teaching style and students’ needs.

Further Resources

- Examples of AI Chatbots

  - ELIZA Chatbot Online – An interactive version to see how primitive (yet groundbreaking) chatbots first worked.

  - Duolingo – A fun language-learning app that uses chatbot-like interactions.

  - AI in Education Research – OECD’s AI policy observatory with articles and data relevant to AI in schools.

  - Khanmigo – An AI tutor integrated into the Khan Academy learning platform.

- Technical details of modern chatbots

  - Inner workings of ChatGPT – A post describing the inner workings of ChatGPT and similar chatbots.

Whether you’re a teacher, a parent, or simply curious about the latest trends in technology, understanding chatbots can give you a window into the broader world of AI. Let’s keep the conversation going and shape a future where these tools empower both educators and learners.