Introduction

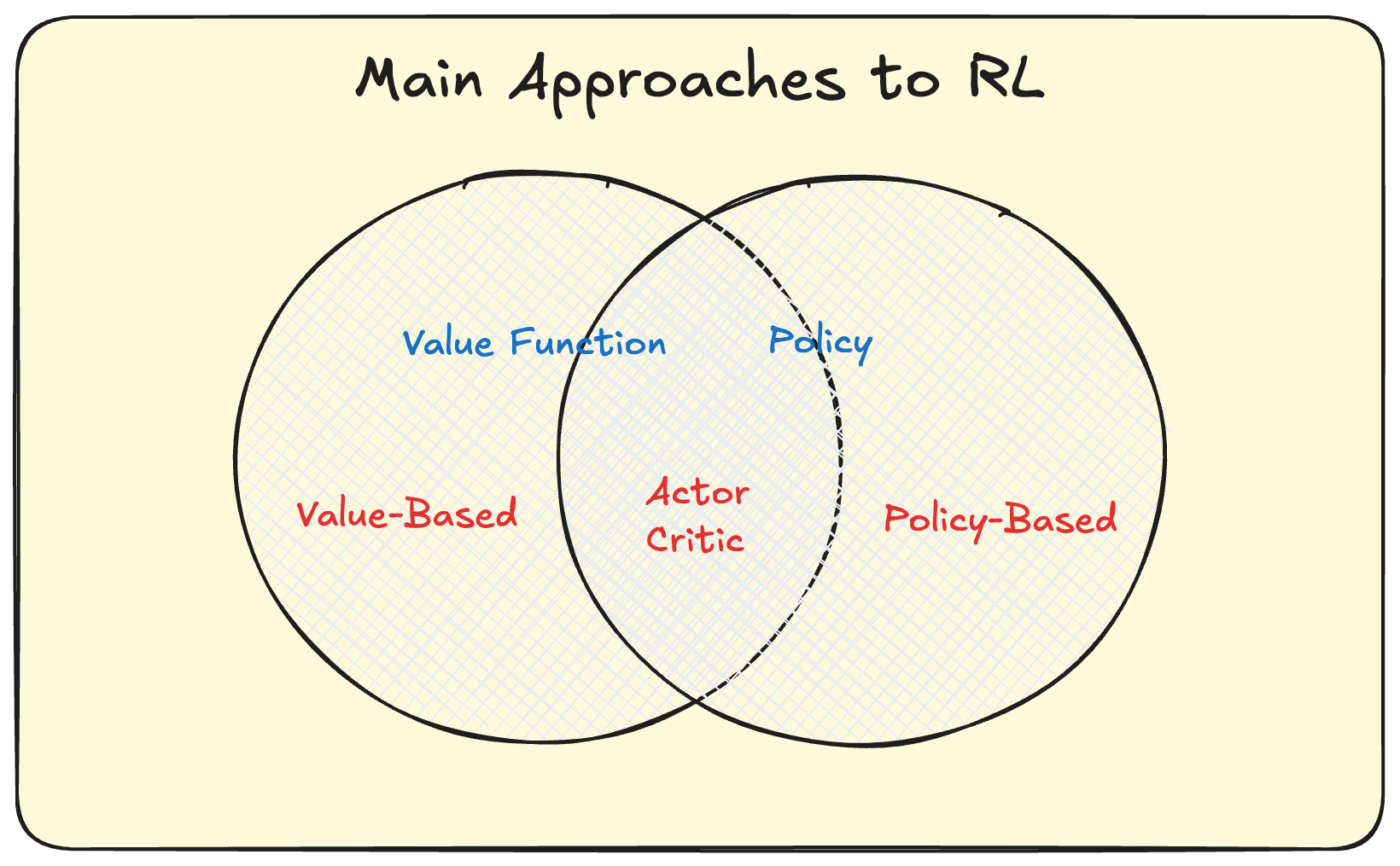

Actor-Critic methods are a type of reinforcement learning algorithm that combine the benefits of both value-based and policy-based approaches. This blog post aims to provide a high-level overview of these methods.

Background

Reinforcement Learning (RL) is a type of machine learning where an agent learns to make decisions by interacting with an environment. RL methods can be broadly categorized into value-based and policy-based methods. In previous posts, we discussed policy gradient algorithms along with a brief introduction to RL; see here.

Actor-Critic Approach

The core idea of Actor-Critic methods is to combine an “actor” (the policy) and a “critic” (a value function). The actor's goal is to select actions given a state, and the critic's goal is to “critique” the actions chosen by the policy.

More concretely, there are three main approaches to reinforcement learning: value-based, policy-based, and actor-critic methods.

- Value-based learning learns a value function $Q_\theta(s, a)$ and infers a policy through maximization: $\pi(s) = \arg\max_a Q_\theta(s, a)$. The policy is therefore implicit.
- Policy-based learning takes a different route. It explicitly learns a parameterized policy $\pi_\theta(a \vert s)$ by directly maximizing expected reward with respect to $\theta$, without maintaining a value function.
- Actor-critic methods combine both approaches by simultaneously learning a value function and a policy, leveraging the benefits of each.
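The toy Python snippet below contrasts an implicit policy with an explicit one for a single state with three actions. The Q-values and logits are made-up numbers, and the softmax stands in for the output of a policy network $\pi_\theta(a \vert s)$. It is an illustration only, not part of the original post.

```python
import math
import random

# Hypothetical Q-values for one state with three actions (illustrative numbers).
q_values = {"left": 1.0, "stay": 0.2, "right": 2.5}

# Value-based: the policy is implicit, pi(s) = argmax_a Q(s, a).
greedy_action = max(q_values, key=q_values.get)

# Policy-based: the policy is explicit, here a softmax over hypothetical logits
# standing in for the output of a policy network pi_theta(a | s).
logits = {"left": 0.5, "stay": -1.0, "right": 1.0}
z = sum(math.exp(v) for v in logits.values())
pi = {a: math.exp(v) / z for a, v in logits.items()}

# Actions are sampled from the explicit distribution rather than picked greedily.
sampled_action = random.Random(0).choices(list(pi), weights=list(pi.values()))[0]

print(greedy_action)  # right
print(pi)
print(sampled_action)
```

An actor-critic method would keep both pieces. The explicit distribution plays the actor, and a learned value function plays the critic that scores the sampled action.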

Role of the Actor

- Learning the optimal policy.

- Taking actions in the environment.

Role of the Critic

- Evaluating the actions taken by the actor.

- Providing feedback to the actor.

Advantages of Actor-Critic Methods

Combining policy and value-based approaches allows Actor-Critic methods to address some limitations of each individual approach, providing a more robust learning framework.

The motivation for using Actor-Critic methods stems from the challenges inherent in both value-based and policy-based RL. As Vapnik famously stated, “When solving a problem of interest, do not solve a more general problem as an intermediate step.” In the context of value-based RL, this suggests that learning a value function can be unnecessarily complex, especially when a simpler policy would suffice. On the other hand, pure policy gradient methods, such as REINFORCE, often suffer from high variance.

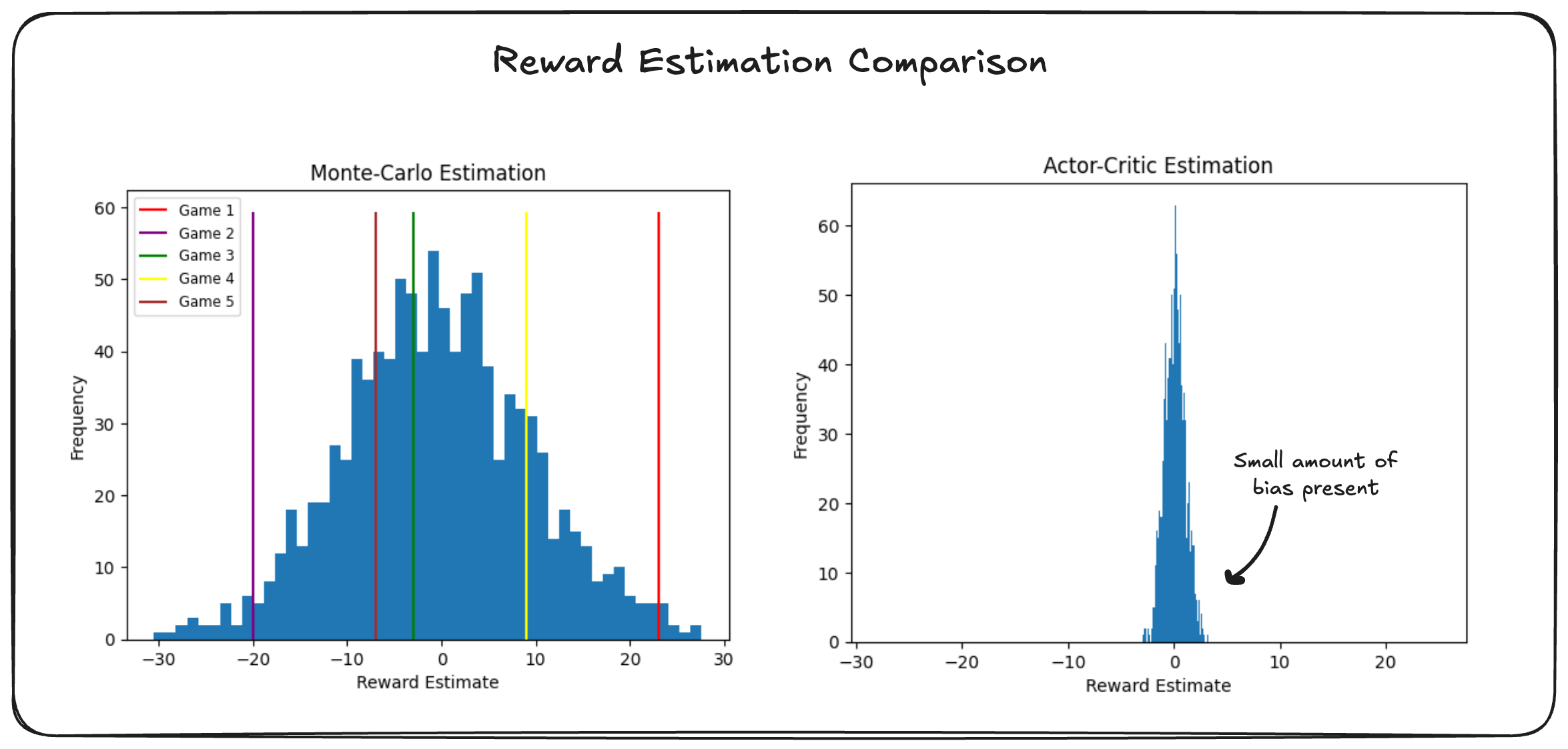

This variance issue arises because policy gradient methods estimate the gradient of the expected reward by playing a game under the current policy and recording the states, actions, and rewards encountered. This Monte Carlo sampling approach provides an unbiased estimate, but it can have high variance, meaning individual policy updates may point in suboptimal directions. A bad update is also hard to recover from: the next batch of games is played with the updated policy, which may now be even further from a good one.

To illustrate, imagine playing $N = 1000$ games, where the game is formalised as an RL problem, and plotting a histogram of the observed rewards. This typically results in a wide spread, which indicates high variance. If you then selected a small sample of rewards, e.g. $N = 5$, you'd likely notice that they differ considerably from one another.
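A small simulation makes this concrete. It uses a hypothetical toy game that is not from the original post. Each step survived earns +1 reward, and the episode ends with probability 0.1 after every step. Returns therefore follow a geometric distribution with mean 10 and a standard deviation of roughly 9.5.

```python
import random
import statistics
from collections import Counter

def play_game(rng: random.Random, p_end: float = 0.1) -> int:
    """Hypothetical toy game: +1 reward per step survived;
    the episode terminates with probability p_end after each step."""
    total = 0
    while True:
        total += 1
        if rng.random() < p_end:
            return total

rng = random.Random(0)
returns = [play_game(rng) for _ in range(1000)]  # N = 1000 games

mean = statistics.mean(returns)
std = statistics.stdev(returns)
print(f"mean return = {mean:.1f}, std = {std:.1f}")

# Crude text histogram with bins of width 5 (one '#' per 10 games).
bins = Counter(r // 5 * 5 for r in returns)
for lo in sorted(bins):
    print(f"{lo:3d}-{lo + 4:3d} | {'#' * (bins[lo] // 10)}")

# A sample of only N = 5 games can look very different from run to run.
print("five games:", [play_game(rng) for _ in range(5)])
```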

Actor-Critic methods aim to alleviate this variance by incorporating a critic that provides more stable and efficient feedback to the actor. In terms of the game analogy above, this is like observing a distribution of rewards that is slightly biased but more sharply peaked, and therefore has lower variance.

Don’t get too hung up on the exact shapes of the plots; they are only there to illustrate the idea.
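One way a critic reduces variance can be shown on a hypothetical two-armed bandit, which is a one-step RL problem. The sketch compares two gradient estimates:

- the plain REINFORCE estimate (score × reward);
- the same estimate after subtracting a state-value baseline $V(s)$, the quantity a critic learns.

The sketch makes two simplifying assumptions. First, the baseline is the exact value rather than a learned estimate. Second, there is no bootstrapping. So it isolates the variance reduction only; the slight bias mentioned above comes from bootstrapping on an imperfect critic, which is not modelled here.

```python
import math
import random
import statistics

rng = random.Random(0)
theta = 0.0                        # single policy parameter: logit of choosing arm 1
p1 = 1 / (1 + math.exp(-theta))    # pi(a=1) = sigmoid(theta) = 0.5
mean_reward = {0: 10.0, 1: 11.0}   # hypothetical bandit: both arms pay about 10

# Critic stand-in: the exact state value V = E_pi[R]; a learned critic would approximate it.
value_baseline = (1 - p1) * mean_reward[0] + p1 * mean_reward[1]

def grad_sample(use_baseline: bool) -> float:
    """One score-function (REINFORCE) estimate of dE[R]/dtheta."""
    a = 1 if rng.random() < p1 else 0
    r = rng.gauss(mean_reward[a], 1.0)
    score = (1 - p1) if a == 1 else -p1   # d/dtheta log pi(a) for a sigmoid policy
    signal = r - value_baseline if use_baseline else r   # advantage vs raw reward
    return score * signal

plain = [grad_sample(False) for _ in range(10_000)]
critic = [grad_sample(True) for _ in range(10_000)]

# True gradient is p1 * (1 - p1) * (11 - 10) = 0.25; both estimators are unbiased.
print(f"REINFORCE:       mean={statistics.mean(plain):.3f} var={statistics.variance(plain):.3f}")
print(f"with baseline V: mean={statistics.mean(critic):.3f} var={statistics.variance(critic):.3f}")
```

Both estimators centre on the true gradient of 0.25. Subtracting the baseline removes the large common reward of about 10 that every sample carries, so the variance drops by roughly two orders of magnitude.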

Note: You can think of this as stemming from the fact that the sample mean $\bar{X}$, used to estimate the expected reward, is itself a random variable with its own expectation $\mathbb{E}[\bar{X}]$ and variance $\text{Var}(\bar{X})$. For independent games, increasing the number of games $N$ reduces this variance only in proportion to $1/N$, while the computational cost grows in proportion to $N$. Actor-Critic methods offer a way to reduce variance without such a steep increase in computation.
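The scaling follows in one line, under two assumptions not stated above: the $N$ games are independent, and each reward $X_i$ has the same mean and variance $\sigma^2$.

$$
\mathbb{E}[\bar{X}] = \mathbb{E}[X_1], \qquad
\text{Var}(\bar{X}) = \text{Var}\Big(\frac{1}{N}\sum_{i=1}^{N} X_i\Big) = \frac{1}{N^2}\sum_{i=1}^{N}\text{Var}(X_i) = \frac{\sigma^2}{N}, \qquad
\text{SD}(\bar{X}) = \frac{\sigma}{\sqrt{N}}
$$

So the estimate stays unbiased, but halving its standard deviation takes four times as many games, and shrinking it tenfold takes a hundred times as many.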

Key Components

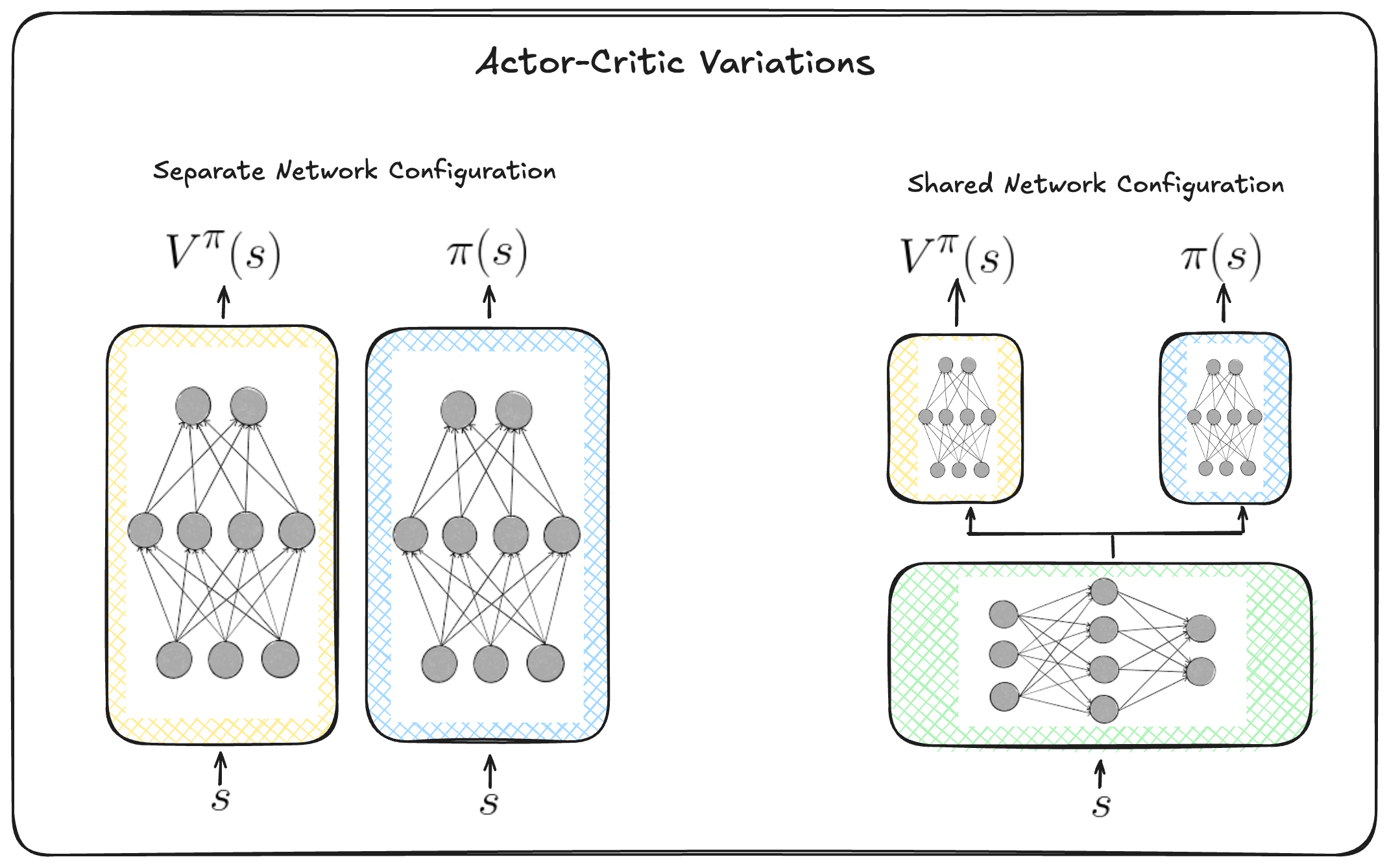

Actor Network

- Architecture: Typically a neural network.

- Function: Parameterizes the policy.

Critic Network

- Architecture: Typically a neural network.

- Function: Estimates the value function (e.g., Q-value or state-value).
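To make the two components concrete, here is a minimal PyTorch sketch that uses separate networks for the actor and the critic. The sizes are assumptions: a 4-dimensional state, 2 discrete actions and 64 hidden units. For discrete actions, a Q-critic is commonly given one output per action. Continuous-action methods such as DDPG instead feed the action in as an input.

```python
import torch
import torch.nn as nn

state_dim, n_actions, hidden = 4, 2, 64   # assumed sizes for illustration

# Actor: maps a state to a probability distribution over actions, pi_theta(a | s).
actor = nn.Sequential(
    nn.Linear(state_dim, hidden), nn.ReLU(),
    nn.Linear(hidden, n_actions), nn.Softmax(dim=-1),
)

# Critic option 1: state-value V(s), one scalar per state.
v_critic = nn.Sequential(nn.Linear(state_dim, hidden), nn.ReLU(), nn.Linear(hidden, 1))

# Critic option 2: action-value Q(s, a) for discrete actions, one output per action.
q_critic = nn.Sequential(nn.Linear(state_dim, hidden), nn.ReLU(), nn.Linear(hidden, n_actions))

states = torch.randn(8, state_dim)             # batch of 8 hypothetical states
actions = torch.randint(0, n_actions, (8,))    # hypothetical actions taken
probs = actor(states)                          # shape (8, 2), each row sums to 1
values = v_critic(states)                      # shape (8, 1)
q_taken = q_critic(states).gather(1, actions.unsqueeze(1))  # Q(s, a) of taken actions, (8, 1)
```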

Sample implementation, full code here:

```python
import torch.nn as nn
import torch.nn.functional as F


class Policy(nn.Module):
    """
    implements both actor and critic in one model
    """

    def __init__(self):
        super(Policy, self).__init__()
        # shared hidden layer: 4 input features (e.g. a CartPole observation) -> 128 units
        self.affine1 = nn.Linear(4, 128)

        # actor's layer: one logit per action (2 actions)
        self.action_head = nn.Linear(128, 2)

        # critic's layer: a single state value
        self.value_head = nn.Linear(128, 1)

        # action & reward buffer
        self.saved_actions = []
        self.rewards = []

    def forward(self, x):
        """
        forward of both actor and critic
        """
        x = F.relu(self.affine1(x))

        # actor: chooses action to take from state s_t
        # by returning probability of each action
        action_prob = F.softmax(self.action_head(x), dim=-1)

        # critic: evaluates being in the state s_t
        state_values = self.value_head(x)

        # return values for both actor and critic as a tuple of 2 values:
        # 1. a tensor with the probability of each action over the action space
        # 2. the value from state s_t
        return action_prob, state_values
```

Algorithm Flow

- The actor takes an action based on the current policy.

- The critic evaluates the action and provides feedback, e.g. the TD error $\delta_t = r_{t+1} + \gamma V(s_{t+1}) - V(s_t)$, where $\gamma$ is the discount factor.

- The actor updates its policy based on the feedback from the critic.

- The critic updates its value function to better estimate future rewards.
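The four steps above can be sketched as a one-step (TD(0)) actor-critic update in PyTorch. This is an illustrative sketch, not the author's full code. The `ActorCritic` module, the `toy_env_step` environment and the hyperparameters are all hypothetical. In the toy environment, action 1 earns reward 1 and every episode ends after one step, so the example runs without an RL library.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F
from torch.distributions import Categorical

torch.manual_seed(0)

class ActorCritic(nn.Module):
    """Shared trunk with an actor head (action logits) and a critic head (V(s))."""
    def __init__(self, state_dim: int = 4, n_actions: int = 2, hidden: int = 128):
        super().__init__()
        self.trunk = nn.Linear(state_dim, hidden)
        self.actor = nn.Linear(hidden, n_actions)
        self.critic = nn.Linear(hidden, 1)

    def forward(self, x):
        h = F.relu(self.trunk(x))
        return Categorical(logits=self.actor(h)), self.critic(h).squeeze(-1)

def toy_env_step(action: int):
    """Hypothetical environment: reward 1 for action 1, else 0; always terminal."""
    return torch.randn(4), float(action == 1), True

def actor_critic_update(model, optimizer, state, gamma=0.99):
    dist, value = model(state)
    action = dist.sample()                                   # 1. actor takes an action
    next_state, reward, done = toy_env_step(action.item())
    with torch.no_grad():                                    # 2. critic's TD target
        _, next_value = model(next_state)
        target = reward + gamma * next_value * (1.0 - float(done))
    td_error = target - value                                # feedback: TD error
    actor_loss = -dist.log_prob(action) * td_error.detach()  # 3. actor update signal
    critic_loss = td_error.pow(2)                            # 4. critic regresses to target
    optimizer.zero_grad()
    (actor_loss + critic_loss).backward()
    optimizer.step()
    return td_error.item()

model = ActorCritic()
optimizer = torch.optim.Adam(model.parameters(), lr=5e-3)
state = torch.randn(4)
for _ in range(500):
    actor_critic_update(model, optimizer, state)

dist, value = model(state)
print(f"pi(a=1|s) = {dist.probs[1].item():.2f}, V(s) = {value.item():.2f}")
```

The `.detach()` stops the actor's loss from changing the critic's estimate through the TD error. In this toy, every transition is terminal, so the TD error reduces to $r - V(s)$. On non-terminal transitions, the target bootstraps from $V(s_{t+1})$, which is where the lower-variance but slightly biased signal comes from.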

Variants of Actor-Critic Methods

- A2C (Advantage Actor-Critic)

- A3C (Asynchronous Advantage Actor-Critic)

- DDPG (Deep Deterministic Policy Gradient)

- TD3 (Twin Delayed DDPG)

- SAC (Soft Actor-Critic)

Applications

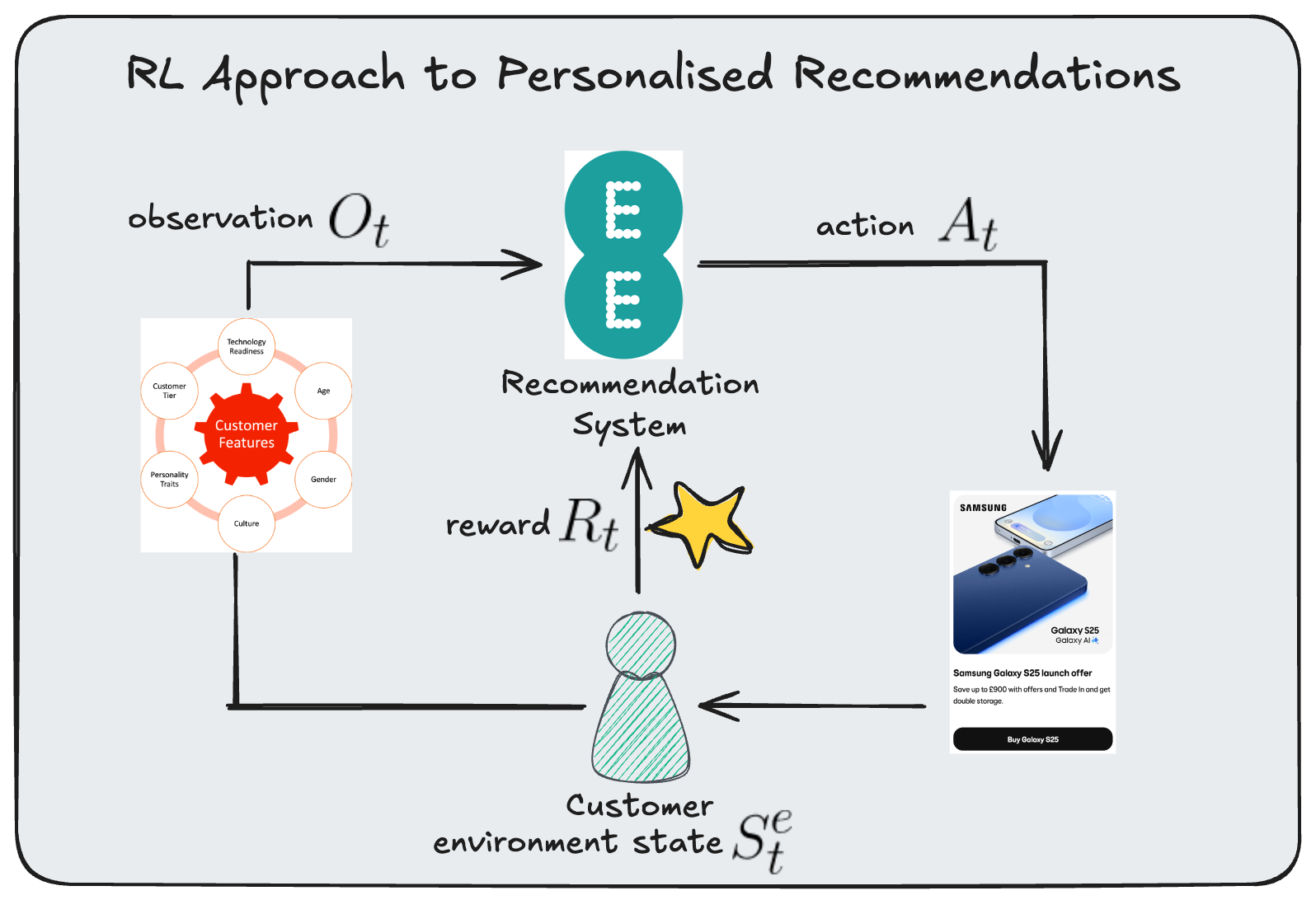

Actor-Critic methods are used in a range of real-world applications, including robotics, game playing, autonomous driving and, most notably, personalised recommendations for customers.

Conclusion

Actor-Critic methods combine the strengths of value-based and policy-based approaches, making them a powerful tool in reinforcement learning.

Further Resources

- Research Papers

- Foundational Papers

- Barto, Sutton & Anderson (1983): Actor-Critic Architecture

- Sutton et al. (2000): Policy Gradient Methods

- Deep RL Advances

- Mnih et al. (2016): A3C

- A breakthrough in deep RL. A3C trained multiple actor-critic agents in parallel threads, achieving state-of-the-art results on Atari games and continuous control tasks. This paper demonstrated the stability and efficiency gains from using an actor-critic with multi-threaded training.

- Lillicrap et al. (2015): DDPG

- An actor-critic algorithm for continuous action spaces. DDPG uses a parametric actor (policy network) and a critic (Q-value network), along with experience replay and target networks (inspired by DQN), to learn continuous control policies (e.g. for robotic locomotion). It became a foundation for many continuous RL methods.

- Schulman et al. (2017): PPO

- PPO is a widely used policy optimization method that is usually implemented as an actor-critic, with a clipped surrogate objective for stability. It simplifies earlier trust-region methods while achieving robust performance. PPO’s popularity in the RL community is due to its ease of use and reliability on a variety of benchmarks.

- Haarnoja et al. (2018): SAC

- Introduced Soft Actor-Critic, an off-policy actor-critic algorithm that maximizes a trade-off between expected reward and entropy. SAC’s stochastic actor aims for both high reward and high randomness (exploration), leading to state-of-the-art results in continuous control. This represents a recent advancement in actor-critic methods, improving stability and sample efficiency.


- Application in Recommendation Systems

- Survey (Lin et al., 2021): Reinforcement Learning for Recommender Systems

- Zhao et al. (2019): Deep RL for List-wise Recommendations

- Chen et al. (2019, YouTube/Google): Top-K Off-Policy Correction

- Xin et al. (2022): Supervised Advantage Actor-Critic

- Hierarchical Actor-Critic for Recommendations: Recent Research


- Articles, Tutorials, and Blogs

- Lilian Weng’s Blog: Policy Gradient Algorithms (Actor Critic)

- Hugging Face’s Deep RL Course: Actor-Critic Unit

- Chris Yoon’s Tutorial: Understanding A2C

- OpenAI Spinning Up: RL Documentation

- Great for learners looking to upskill more broadly in deep RL. Includes readable introductions to policy gradient and actor-critic approaches. For example, its algorithm pages cover Vanilla Policy Gradient, TRPO, PPO, DDPG, TD3 and SAC, each with reference code.

- Implementations and Code Examples